I've been playing around with generating detailed overviews of my running Linux system. Existing tools, such as top or htop, do a pretty good job of displaying the processes consuming the most resources, but I'm looking for an at-a-glance overview of all processes and their impact on the system. I've used this goal as a reason to learn a little more about the Python plotting tool matplotlib.

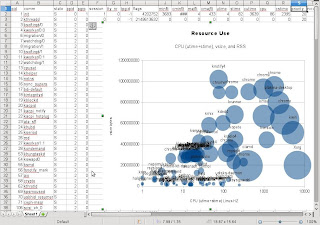

The plot to the right is generated by a matplotlib python script producing something similar to the LibreOffice plot in my previous blog entry. The process-file-system extraction code from the previous blog posting is used to extract CPU, vsize, and RSS data. Unlike the previous example, the script refreshes the plot every few seconds. This new plot uses a hash to pick a colour for each user and also annotates each point with the PID, command, and username.
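
The colour-per-user idea can be sketched in a few lines. This is a Python 3 variant, not the code from the script below: it swaps the built-in `hash()` (which Python 3 randomises per process for strings) for `hashlib.sha256`, on the assumption that a user should keep the same colour between runs. The name `user_color` is made up for illustration.

```python
import hashlib


def user_color(username):
    """Map a username to a repeatable (r, g, b) tuple, each channel in 0..1."""
    digest = hashlib.sha256(username.encode("utf-8")).digest()
    # Use the first three bytes of the digest as red, green and blue.
    return tuple(byte / 255.0 for byte in digest[:3])
```

The returned tuple can be passed anywhere matplotlib accepts a colour, for example as one entry of the `c=` list given to `scatter`.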

Plotting every process results in a very cluttered graph. To reduce the clutter I have restricted the plot data to the "interesting" processes - those that have changing values. Interest decays with inactivity, so processes that become static eventually disappear from the graph.
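
The decay rule is small enough to show on its own. The following is a simplified Python 3 sketch of the idea rather than the script's `TrackingData` class; the class name `Interest` is hypothetical, and it tracks a single sampled value where the script compares CPU, vsize and RSS together.

```python
INTERESTING_DEFAULT = 3  # cycles a process stays on the plot after its last change


class Interest:
    """Track whether a sampled value has changed recently."""

    def __init__(self, value):
        self.value = value
        self.level = 0  # new processes start out hidden

    def update(self, value):
        if value != self.value:
            self.level = INTERESTING_DEFAULT  # a change renews interest
        elif self.level > 0:
            self.level -= 1                   # no change: interest decays
        self.value = value
        return self.level > 0                 # True while worth plotting
```

With the default of 3, a process that changes once stays on the plot for two further unchanged samples and drops off on the third.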

I wasn't happy with the bubble plot because clutter made it hard to get an overview of all process activity, which was the whole point of the exercise. Time for a different tack.

The second plot, produced by plot_grid.py below, lays every process out as a point on a grid, grouped by username. Each point is coloured by its activity since the last refresh:

- Light grey: no activity

- Red: consumed CPU

- Green: performed IO

- Orange: consumed CPU and performed IO

- Yellow: exited.
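
The colour legend above boils down to a small decision function. This is a Python 3 sketch under a few assumptions: the name `activity_color` is invented, the colour strings follow the list rather than the script's defaults, and it ignores the script's extra rule that a process in run state `R` also counts as consuming CPU.

```python
def activity_color(delta_cpu, delta_io, exited=False):
    """Pick a point colour from the change in CPU ticks and IO bytes since the last sample."""
    if exited:
        return "yellow"
    if delta_cpu > 0 and delta_io > 0:
        return "orange"
    if delta_cpu > 0:
        return "red"
    if delta_io > 0:
        return "green"
    return "lightgrey"
```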

Changes in RSS are exaggerated so that even small changes in RSS are visible as a little "throb" in the point size. The plot updates once every three seconds or whenever the space bar is pressed. Because the points are grouped by username and start time, their placement remains fairly static and predictable from cycle to cycle.
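
Both ideas, stable placement and the size "throb", can be sketched independently of matplotlib. The Python 3 functions below are illustrative only: `grid_positions` and `throb_size` are made-up names, processes are represented as plain `(username, starttime, pid)` tuples, and `max_rss` is assumed to be greater than zero.

```python
def grid_positions(procs, max_cols=30):
    """Assign each (username, starttime, pid) tuple a (col, row) cell.

    Sorting by username, then start time, then PID keeps long-lived processes
    in the same cell from one refresh to the next. Each user starts a new row.
    """
    positions = {}
    col = row = 0
    previous_user = None
    for username, starttime, pid in sorted(procs):
        if previous_user is not None and username != previous_user and col != 0:
            col, row = 0, row + 1      # new user: start a new row
        positions[pid] = (col, row)
        col += 1
        if col == max_cols:            # row is full: wrap to the next one
            col, row = 0, row + 1
        previous_user = username
    return positions


def throb_size(rss, previous_rss, max_rss, base_size=300, min_size=20):
    """Scale the point by RSS, then bump it for one cycle if RSS changed."""
    size = max(min_size, base_size * rss / max_rss)
    bump = max(20, size / 4)
    if rss > previous_rss:
        size += bump
    elif rss < previous_rss:
        size -= bump
    return size
```

Because the bump is only applied while the current and previous samples differ, the point settles back to its steady-state size on the next refresh.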

In matplotlib it is relatively easy to trap hover and click events. I've included code to trap hover and display a popup with details for the process under the mouse. In the screen-capture above, I've hovered over the Xorg X-Windows server. I also trap right-click and pin the popup so that it keeps updating even after the mouse is moved away.
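
As a rough illustration of the hover mechanism, the Python 3 sketch below registers a handler through `figure.canvas.mpl_connect`, which is matplotlib's standard way to subscribe to events such as `motion_notify_event`. Everything else is an assumption for the example: the helper names `cell_under_pointer` and `connect_hover`, the `index` dictionary mapping grid cells to process objects, and the `show` and `clear` callbacks.

```python
def cell_under_pointer(xdata, ydata):
    """Round data coordinates to the nearest integer grid cell."""
    return (int(round(xdata)), int(round(ydata)))


def connect_hover(figure, index, show, clear):
    """Call show(item) when the pointer is over a cell in index, else clear().

    figure is a matplotlib Figure; index maps (col, row) cells to any object.
    show and clear are callbacks supplied by the caller.
    """
    def on_move(event):
        # xdata and ydata are only set while the pointer is inside an axes.
        if event.inaxes is None or event.xdata is None:
            clear()
            return
        item = index.get(cell_under_pointer(event.xdata, event.ydata))
        if item is None:
            clear()
        else:
            show(item)

    # mpl_connect returns a connection id that can be passed to mpl_disconnect.
    return figure.canvas.mpl_connect("motion_notify_event", on_move)
```

A click handler can be attached the same way by connecting to `button_press_event`; the listing further down routes both event types through a single `__call__` method instead.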

The number of memory hogs visible on the plot is a bit exaggerated because the plot includes threads as well as processes (threads share memory). This plot is somewhat similar to the Hinton Diagram seen in the matplotlib example pages, which may also be an interesting basis for viewing a collection of processes or servers. (My own efforts pre-date my discovery of the Hinton example, so the implementation details differ substantially.)

Finally, I thought I'd include a 3D plot. I'm not sure it's that useful, but adding an extra dimension is food for thought. The script plots cumulative CPU and RSS over the duration of the script's run time. It uses a heuristic to select "interesting" processes. In this particular screen capture I shut down my Eclipse+Android IDE (the first set of terminated lines) and then restarted the same IDE (the steep climbing lines). The CPU and memory consumed by the plotting script can be seen as the diagonal line across the bottom time-CPU axis.
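
The per-process bookkeeping behind such a time line can be sketched as a bounded history of samples. This Python 3 version is not the script's code: the class name `Timeline` is hypothetical, it uses `collections.deque` with `maxlen` in place of manually deleting the oldest list entries, and it assumes the caller supplies the clock-tick rate (for example from `os.sysconf('SC_CLK_TCK')`).

```python
from collections import deque


class Timeline:
    """Keep recent (time, cumulative CPU seconds, RSS) samples for one process."""

    def __init__(self, base_ticks, ticks_per_second, limit=1000):
        self.base_ticks = base_ticks              # utime + stime when first seen
        self.ticks_per_second = ticks_per_second
        self.points = deque(maxlen=limit)         # oldest samples fall off the front

    def add(self, timestamp, ticks, rss):
        cpu_seconds = (ticks - self.base_ticks) / self.ticks_per_second
        self.points.append((timestamp, cpu_seconds, rss))

    def columns(self):
        """Return three lists (xs, ys, zs) ready to pass to a 3D line plot."""
        if not self.points:
            return [], [], []
        xs, ys, zs = zip(*self.points)
        return list(xs), list(ys), list(zs)
```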

Matplotlib is a really useful plotting library. Whenever I thought I'd exhausted what was possible, a Google search would usually prove me wrong.

The code

plot_bubble_cpu_vsize_rss.py

    #!/usr/bin/env python
    #
    # Bubble Plot: cpu (x), vsize(y), rss(z)
    #
    # Copyright (C) 2011: Michael Hamilton
    # The code is GPL 3.0(GNU General Public License) ( http://www.gnu.org/copyleft/gpl.html )
    #
    import matplotlib
    matplotlib.use('GTkAgg')
    import pylab
    import gobject
    import sys
    import os
    import math
    from optparse import OptionParser
    import linuxprocfs as procfs

    SC_CLK_TCK=float(os.sysconf(os.sysconf_names['SC_CLK_TCK']))
    ZOMBIE_LIVES=2 # Not a true UNIX zombie, just a plot zombie.
    INTERESTING_DEFAULT=3 # Don't display a process if it is boring this many times in a row.
    INTERVAL = 2

    _user_colors = {}

    def _user_color(username):
        if username in _user_colors:
            color = _user_colors[username]
        else:
            # For a username, generate a pseudo random (r,g,b) values in the 0..1.0 range
            # E.g. for root base this on hash(root), hash(oot), and hash(ot) - randomise
            # each hash further - seems to work better than the straight hash
            color = tuple([math.fabs(hash(username[i:]) * 16807 % 2147483647)/(2147483647 * 1.3) for i in (0, 2, 1)])
            #color = tuple([abs(hash(username[i:]))/(sys.maxint*1.3) for i in (2, 1, 0)])
            _user_colors[username] = color
            print username, color
        return color

    class TrackingData(object):

        def __init__(self, info):
            self.previous = info
            self.current = info
            self.color = _user_color(info.username)
            self.text = '%d %s %s' % (info.pid, info.username, info.stat.comm )
            self.interesting = 0
            self.x = 0
            self.y = info.stat.vsize
            self.z = info.stat.rss / 10
            self.zombie_lives = ZOMBIE_LIVES

        def update(self, info):
            self.current = info
            oldx = self.x
            oldy = self.y
            oldz = self.z
            self.x = ((info.stat.stime + info.stat.utime) - (self.previous.stat.stime + self.previous.stat.utime)) /SC_CLK_TCK
            self.y = info.stat.vsize
            self.z = info.stat.rss / 10
            if self.x == oldx and self.y == oldy and self.z == oldz:
                if self.interesting > 0:
                    self.interesting -= 1 # if interesting drops to zero, stop plotting this process
            else:
                self.interesting = INTERESTING_DEFAULT
            self.previous = self.current
            self.zombie_lives = ZOMBIE_LIVES

        def is_alive(self):
            self.zombie_lives -= 1
            return self.zombie_lives > 0

    class PlotBubbles(object):

        def __init__(self, sleep=INTERVAL, include_threads=False):
            self.subplot = None
            self.datums = {}
            self.sleep = sleep * 1000
            self.include_threads = include_threads

        def make_graph(self):
            all_procs = procfs.get_all_proc_data(self.include_threads)
            y_list = []
            x_list = []
            s_list = []
            color_list = []
            anotations = []
            for proc_info in all_procs:
                if not proc_info.pid in self.datums:
                    data = TrackingData(proc_info)
                    self.datums[proc_info.pid] = data
                else:
                    data = self.datums[proc_info.pid]
                    data.update(proc_info)
                if data.interesting > 0: # Only plot active processes
                    x_list.append(data.x)
                    y_list.append(data.y)
                    s_list.append(data.z)
                    color_list.append(data.color)
                    anotations.append((data.x, data.y, data.color, data.text))
                if not data.is_alive():
                    del self.datums[proc_info.pid]
            if self.subplot == None:
                figure = pylab.figure()
                self.subplot = figure.add_subplot(111)
            else:
                self.subplot.cla()
            if len(x_list) == 0: # Nothing to plot - probably initial cycle
                return True
            self.subplot.scatter(x_list, y_list, s=s_list, c=color_list, marker='o', alpha=0.5)
            pylab.xlabel(r'Change in CPU (stime+utime)', fontsize=20)
            pylab.ylabel(r'Total vsize', fontsize=20)
            pylab.title(r'Process CPU, vsize, and RSS')
            pylab.grid(True)
            gca = pylab.gca()
            for x, y, color, text in anotations:
                gca.text(x, y, text, alpha=1, ha='left',va='bottom',fontsize=8, rotation=33, color=color)
            pylab.draw()
            return True

        def start(self):
            self.scatter = None
            self.make_graph()
            pylab.connect('key_press_event', self)
            gobject.timeout_add(self.sleep, self.make_graph)
            pylab.show()

        def __call__(self, event):
            self.make_graph()

    if __name__ == '__main__':
        usage = """usage: %prog [options|-h]
    Plot change in CPU(x), total vsize(y) and total RSS.
    """
        parser = OptionParser(usage)
        parser.add_option("-t", "--threads", action="store_true", dest="include_threads", help="Include threads as well as processes.")
        parser.add_option("-s", "--sleep", type="int", dest="interval", default=INTERVAL, help="Sleep seconds for each repetition.")
        (options, args) = parser.parse_args()
        PlotBubbles(options.interval, options.include_threads).start()

    % python plot_bubble_cpu_vsize_rss.py -h
    Usage: plot_bubble_cpu_vsize_rss.py [options|-h]
    Plot change in CPU(x), total vsize(y) and total RSS.

    Options:
      -h, --help            show this help message and exit
      -t, --threads         Include threads as well as processes.
      -s INTERVAL, --sleep=INTERVAL
                            Sleep seconds for each repetition.

plot_grid.py

    #!/usr/bin/env python
    #
    # Copyright (C) 2011: Michael Hamilton
    # The code is GPL 3.0(GNU General Public License) ( http://www.gnu.org/copyleft/gpl.html )
    #
    import matplotlib
    matplotlib.use('GTkAgg')
    import pylab
    import time
    import gobject
    import math
    import os
    import linuxprocfs as procfs
    from optparse import OptionParser

    SC_CLK_TCK=float(os.sysconf(os.sysconf_names['SC_CLK_TCK']))
    LIMIT=1000
    INTERVAL=3
    DEFAULT_COLS=30
    BASE_POINT_SIZE=300
    MIN_SIZE=20
    ZOMBIE_LIVES=3 # Not a true UNIX zombie, just a plot zombie
    MAX_RSS = 10
    # Don't spell colour two ways - conform with pylab
    DEFAULT_COLORS=['honeydew','red','lawngreen','orange','yellow','lightyellow','white']

    class ProcessInfo(object):

        def __init__(self, new_procdata):
            self.data = new_procdata
            self.previous = None
            self.alive = True
            self.info = '%d %s %s' % (new_procdata.pid, new_procdata.username, new_procdata.stat.comm )
            self.username = new_procdata.username
            self.color = 'blue'
            self.zombie_lives = ZOMBIE_LIVES

        def update(self, new_procdata):
            self.previous = self.data
            self.data = new_procdata
            self.zombie_lives = ZOMBIE_LIVES

        def report(self):
            return '%s\nstate=%s\nutime=%f\nstime=%f\nrss=%d\nreads=%d\nwrites=%d' % \
                (self.info,
                 self.data.status.state if not self.is_zombie() else 'exited',
                 self.data.stat.utime/SC_CLK_TCK,
                 self.data.stat.stime/SC_CLK_TCK,
                 self.data.stat.rss,
                 self.data.io.read_bytes,
                 self.data.io.write_bytes)

        def is_alive(self):
            self.zombie_lives -= 1
            return self.zombie_lives > 0

        def is_zombie(self):
            return self.zombie_lives < ZOMBIE_LIVES - 1

        def sort_key(self):
            return (self.username, self.data.stat.starttime, self.data.pid)

    class Activity_Diagram(object):

        def __init__(self, sleep=INTERVAL, max_cols=DEFAULT_COLS, point_size=BASE_POINT_SIZE, include_threads=True, colors=''):
            self.process_info = {}
            self.pos_index = {}
            self.subplot = self.label = self.hover_tip = self.hover_tip_data = None
            self.hover_tip_sticky = False
            self.include_threads = include_threads
            self.sleep = sleep
            self.max_cols = max_cols
            self.point_size = point_size
            # Extend colors to same length as default, merge together colors and default, choose non blank values
            self.normal_color, self.cpu_color, self.io_color, self.cpuio_color, self.exit_color, self.tip_color, self.bg_color = \
                [ (c if c != '' and c != None else d) for c,d in map(None, colors, DEFAULT_COLORS)]

        def start(self):
            self.start_time = time.time()
            self._create_new_plot()
            pylab.connect('motion_notify_event', self)
            pylab.connect('button_press_event', self)
            pylab.connect('key_press_event', self)
            gobject.timeout_add(self.sleep * 1000, self._create_new_plot)
            pylab.show()

        def _create_new_plot(self):
            if self.subplot == None:
                figure = pylab.figure()
                figure.set_frameon(True)
                self.subplot = figure.add_subplot(111, axis_bgcolor=self.bg_color)
            else:
                self.subplot.cla()
            plot = self.subplot
            x_vals, y_vals, c_vals, s_vals, namelabels = self._retrieve_data()
            plot.scatter(x_vals, y_vals, c=c_vals, s=s_vals) #, label=data.info)
            plot.axis('equal')
            plot.set_xticks([])
            plot.set_yticks(namelabels[0])
            plot.set_yticklabels(namelabels[1], stretch='condensed')
            self._create_tip(plot) # Refresh the tip the user is currently looking at
            pylab.title('Activity: size=RSS changes; red=CPU; green=IO; orange=CPU and IO.')
            pylab.draw()
            #print "ping"
            return True

        def _retrieve_data(self):
            # Update from procfs
            for proc in procfs.get_all_proc_data(include_threads=self.include_threads):
                if not proc.pid in self.process_info:
                    data = ProcessInfo(proc)
                    self.process_info[proc.pid] = data
                else:
                    data = self.process_info[proc.pid]
                    data.update(proc)
            max_rss = 0
            for info in self.process_info.values():
                if max_rss < info.data.stat.rss:
                    max_rss = info.data.stat.rss
            # Compute values for plotting
            x_vals = []; y_vals = []; c_vals = []; s_vals = []
            usernames = [[],[]]
            col = 0; row = 0
            self.pos_index = {}
            previous = None
            for info in sorted(self.process_info.values(), key=lambda info: info.sort_key()):
                if not info.is_alive():
                    del self.process_info[info.data.pid]
                else:
                    if (not previous or previous.username != info.username):
                        if col != 0:
                            col = 0; row += 1
                        print info.username, -row
                        usernames[0].append(-row)
                        usernames[1].append(info.username)
                    self.pos_index[(col,-row)] = info
                    x_vals.append(col)
                    y_vals.append(-row) # Invert ordering
                    c_vals.append(self._decide_color(info))
                    s_vals.append(self._decide_size(info, max_rss))
                    col += 1
                    if col == self.max_cols:
                        col = 0; row += 1
                    previous = info
            return (x_vals, y_vals, c_vals, s_vals, usernames)

        def _decide_color(self,info):
            if info.is_zombie():
                return self.exit_color
            if info.previous == None:
                delta_cpu = delta_io = 0
            else:
                delta_cpu = (info.data.stat.utime + info.data.stat.stime) - (info.previous.stat.utime + info.previous.stat.stime)
                delta_io = (info.data.io.read_bytes + info.data.io.write_bytes) - (info.previous.io.read_bytes + info.previous.io.write_bytes)
            color = self.normal_color
            if delta_io > 0:
                color = self.io_color
            if delta_cpu > 0 or info.data.stat.state == 'R':
                if delta_io > 0:
                    color = self.cpuio_color
                else:
                    color = self.cpu_color
            return color

        def _decide_size(self, info, max_rss):
            rss = info.data.stat.rss
            delta_rss = rss - (info.previous.stat.rss if info.previous else rss)
            # A relative proportion of the base dot size
            size = max(MIN_SIZE, self.point_size * rss / max_rss)
            if delta_rss > 0: # Temporary throb to indicate change
                size += max(20,size/4)
            elif delta_rss < 0:
                size -= max(20,size/4)
            return size

        def _create_tip(self, axes, x=None, y=None, data=None, toggle_sticky=False):
            if data:
                if not self.hover_tip_sticky or toggle_sticky:
                    if self.hover_tip: self.hover_tip.set_visible(False)
                    self.hover_tip = axes.text(x, y, data.report(), bbox=dict(facecolor=self.tip_color, alpha=0.85), zorder=999)
                    self.hover_tip_data = (x,y,data)
                    if toggle_sticky: self.hover_tip_sticky = not self.hover_tip_sticky
            elif self.hover_tip_data:
                x,y,data = self.hover_tip_data
                self.hover_tip = axes.text(x, y, data.report(), bbox=dict(facecolor=self.tip_color, alpha=0.85), zorder=999)

        def _clear_tip(self):
            if self.hover_tip and not self.hover_tip_sticky:
                self.hover_tip.set_visible(False) # will be free'ed up by next plot draw
                self.hover_tip = self.hover_tip_data = None

        def __call__(self, event):
            #print event.name
            if event.name == 'key_press_event':
                self._create_new_plot()
            else:
                if (event.name == 'motion_notify_event' or event.name == 'button_press_event') and event.inaxes:
                    point = (int(event.xdata + 0.5), int(event.ydata - 0.5))
                    if point in self.pos_index: # On button click let tip stay open without hover
                        self._create_tip(event.inaxes, event.xdata, event.ydata, self.pos_index[point], event.name == 'button_press_event')
                    else:
                        self._clear_tip()
                    pylab.draw()

    if __name__ == '__main__':
        usage = """usage: %prog [options|-h]
    Plot RSS, CPU and IO, with hover and click for details.
    RSS size is plotted as a circle - the circle will temporarily
    jump up and down in size to indicate a growing or shrinking
    RSS - the steady state size is a relative size indicator.
    """
        parser = OptionParser(usage)
        parser.add_option("-p", "--no-threads", action="store_true", dest="no_threads", help="Exclude threads, only show processes.")
        parser.add_option("-s", "--sleep", type="int", dest="interval", default=INTERVAL, help="Sleep seconds for each repetition.")
        parser.add_option("-n", "--columns", type="int", dest="columns", default=DEFAULT_COLS, help="Number of columns in each row (maximum).")
        parser.add_option("-d", "--point-size", type="int", dest="point_size", default=BASE_POINT_SIZE, help="Dot point size (expressed as square area).")
        parser.add_option("-c", "--colors", type="string", dest="colors", default='',
                          help="Colors for normal,cpu,io,cpuio,exited,tip,bg comma separated. " +
                          "Only supply the ones you want to change e.g. -cwhite,,blue - " +
                          " Defaults are " + ','.join(DEFAULT_COLORS))
        (options, args) = parser.parse_args()
        Activity_Diagram(options.interval, options.columns, options.point_size, not options.no_threads, options.colors.split(',')).start()

    % python plot_grid.py -h
    Usage: plot_grid.py [options|-h]
    Plot RSS, CPU and IO, with hover and click for details.
    RSS size is plotted as a circle - the circle will temporarily
    jump up and down in size to indicate a growing or shrinking
    RSS - the steady state size is a relative size indicator.

    Options:
      -h, --help            show this help message and exit
      -p, --no-threads      Exclude threads, only show processes.
      -s INTERVAL, --sleep=INTERVAL
                            Sleep seconds for each repetition.
      -n COLUMNS, --columns=COLUMNS
                            Number of columns in each row (maximum).
      -d POINT_SIZE, --point-size=POINT_SIZE
                            Dot point size (expressed as square area).
      -c COLORS, --colors=COLORS
                            Colors for normal,cpu,io,cpuio,exited,tip,bg comma
                            separated. Only supply the ones you want to change
                            e.g. -cwhite,,blue -  Defaults are
                            honeydew,red,lawngreen,orange,yellow,lightyellow,white

plot_cpu_rss_time_lines_3d.py

    #!/usr/bin/env python
    #
    # Plot a limited time line in 3D for CPU and RSS
    #
    # Copyright (C) 2011: Michael Hamilton
    # The code is GPL 3.0(GNU General Public License) ( http://www.gnu.org/copyleft/gpl.html )
    #
    import matplotlib
    matplotlib.use('GTkAgg')
    import pylab
    import time
    import gobject
    import gtk
    import random
    import string
    import math
    import mpl_toolkits.mplot3d.axes3d as axes3d
    from optparse import OptionParser
    import linuxprocfs as procfs

    HZ=1000.0
    LIMIT=1000
    INTERVAL=5
    ZOMBIE_LIVES=5
    MAX_INTERESTING=5.0

    class ProcessInfo(object):

        def __init__(self, new_procdata):
            self.base = new_procdata
            self.previous = new_procdata
            self.data = new_procdata
            self.text = '%d %s %s' % (new_procdata.pid, new_procdata.username, new_procdata.stat.comm )
            self.color = '#%6.6x' % (((self.base.pid * 16807 % 2147483647)/(2147483647 * 1.3)) % 2**24 )
            self.color = tuple([(self.base.pid * i * 16807 % 2147483647)/(2147483647 * 1.3) for i in (7, 13, 23)])
            self.xvals = []
            self.yvals = []
            self.zvals = []
            self.zombie_lives = ZOMBIE_LIVES
            self.interesting = 0
            self.visible = False

        def update(self, new_procdata):
            self.previous = self.data
            self.data = new_procdata
            self.zombie_lives = ZOMBIE_LIVES # was a literal 5; use the constant
            x = self.data.time_stamp
            y = ((self.data.stat.utime - self.base.stat.utime) + (self.data.stat.stime - self.base.stat.stime)) / HZ
            z = float(new_procdata.stat.rss)
            if len(self.xvals) > LIMIT:
                del self.xvals[0]
                del self.yvals[0]
                del self.zvals[0]
            self.xvals.append(x)
            self.yvals.append(y)
            self.zvals.append(z)
            if self.data.stat.utime - self.previous.stat.utime > 10.0 or self.data.stat.stime - self.previous.stat.stime > 10.0 or abs(self.data.stat.rss - self.previous.stat.rss) > 15000:
                self.visible = True
                self.interesting = MAX_INTERESTING

    class Activity_Diagram(object):

        def __init__(self, remove_dead=True):
            self.process_info = {}
            self.start_time = None
            self.subplot = None
            self.remove_dead = remove_dead

        def make_graph(self):
            for proc in procfs.get_all_proc_data(include_threads=True):
                if not proc.pid in self.process_info:
                    data = ProcessInfo(proc)
                    self.process_info[proc.pid] = data
                else:
                    data = self.process_info[proc.pid]
                    data.update(proc)
            if self.subplot == None:
                figure = pylab.figure()
                self.subplot = figure.add_subplot(111, projection='3d')
            else:
                self.subplot.cla()
            for pid, data in self.process_info.items():
                if data.zombie_lives <= 0 and self.remove_dead:
                    del self.process_info[pid] # dead for a while now - remove
                data.zombie_lives -= 1
                if data.visible:
                    if len(data.xvals) > 0:
                        alpha =data.interesting / MAX_INTERESTING # boring processes fade away
                        self.subplot.plot(data.xvals, data.yvals, data.zvals, color=data.color, alpha=alpha, linewidth=2)
                        self.subplot.text(data.xvals[-1], data.yvals[-1], data.zvals[-1], data.text, alpha=alpha, fontsize=6, color = data.color if data.zombie_lives >= ZOMBIE_LIVES - 1 else 'r')
                        data.interesting -= 1 if data.interesting > 2 else 0 # Losing interest
            self.subplot.set_xlabel(r'time', fontsize=10)
            self.subplot.set_ylabel(r'cpu', fontsize=10)
            self.subplot.set_zlabel(r'rss', fontsize=10)
            pylab.title('Cumulative CPU and RSS over %f minutes' % ((time.time() - self.start_time) /60.0))
            pylab.draw()
            return True

        def start(self, sleep_secs=INTERVAL):
            self.start_time = time.time()
            self.make_graph()
            pylab.connect('key_press_event', self)
            # Use the requested interval; the original passed INTERVAL here, so -s had no effect.
            gobject.timeout_add(sleep_secs*1000, self.make_graph)
            pylab.show()

        def __call__(self, event):
            self.make_graph()

    def boo():
        print 'boo'

    if __name__ == '__main__':
        usage = """usage: %prog [options|-h]
    Plot cumulative-CPU and RSS over the script run time.
    """
        parser = OptionParser(usage)
        parser.add_option("-s", "--sleep", type="int", dest="sleep_secs", default=INTERVAL, help="Sleep seconds for each repetition.")
        (options, args) = parser.parse_args()
        Activity_Diagram(remove_dead=False).start(sleep_secs=options.sleep_secs)

    % python plot_cpu_rss_time_lines_3d.py -h
    Usage: plot_cpu_rss_time_lines_3d.py [options|-h]
    Plot cumulative-CPU and RSS over the script run time.

    Options:
      -h, --help            show this help message and exit
      -s SLEEP_SECS, --sleep=SLEEP_SECS
                            Sleep seconds for each repetition.

Notes

Even if you don't know any Python, you can still have a play by running these scripts from the command line. Just save them into a folder, giving them the appropriate file names, and also save linuxprocfs.py from my previous post. Make sure you've installed python-matplotlib for your distribution of Linux (it's a standard offering for openSUSE, so it's in the repo).

Consult the previous blog entry for some notes on the Linux process file-system.
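
For readers without the previous post to hand, the fields these scripts rely on come from `/proc/[pid]/stat`. The Python 3 sketch below is not `linuxprocfs.py`; it is a stand-alone illustration with a made-up function name, `parse_stat`, that pulls out the same values using the field numbering documented in the proc(5) manual page. Note that `rss` is reported in pages and `utime`/`stime` in clock ticks.

```python
def parse_stat(line):
    """Parse one /proc/[pid]/stat line into a dict of the fields used here."""
    # comm is wrapped in parentheses and may itself contain spaces or ')',
    # so split around the first '(' and the last ')'.
    left, _, rest = line.partition("(")
    comm, _, tail = rest.rpartition(")")
    fields = tail.split()  # fields[0] is field 3 (state) in proc(5)
    return {
        "pid": int(left),
        "comm": comm,
        "state": fields[0],
        "utime": int(fields[11]),      # field 14, clock ticks in user mode
        "stime": int(fields[12]),      # field 15, clock ticks in kernel mode
        "starttime": int(fields[19]),  # field 22
        "vsize": int(fields[20]),      # field 23, bytes
        "rss": int(fields[21]),        # field 24, pages
    }
```

On a Linux system it could be tried with `parse_stat(open('/proc/self/stat').read())`.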

The matplotlib Web-site ( http://matplotlib.sourceforge.net/ ) contains plenty of documentation and examples, plus Google will track down heaps more advice and examples.
